Fire and Forget: Introducing Label Bot

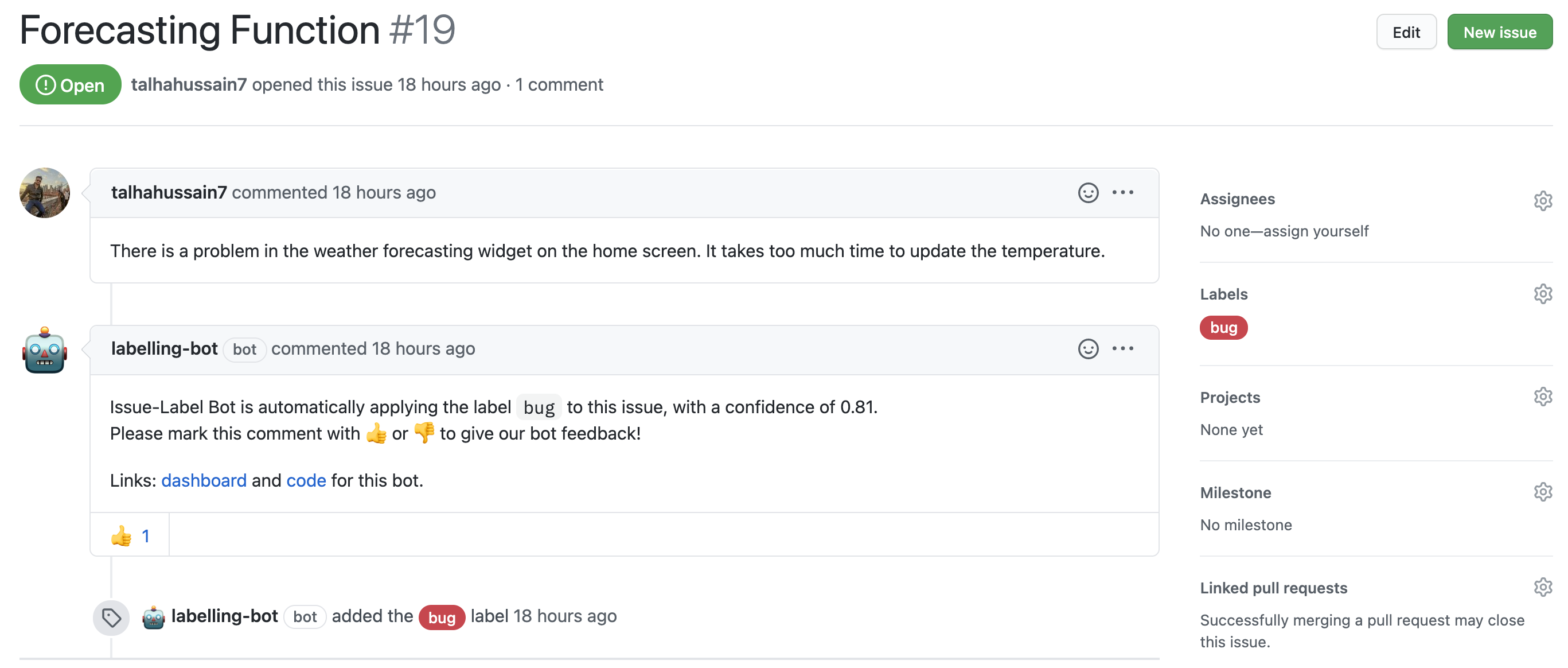

Label Bot uses machine learning to read an issue's title and body and estimate what the issue is about. It then posts a short comment notifying the issue creator of its prediction and labels the issue accordingly.

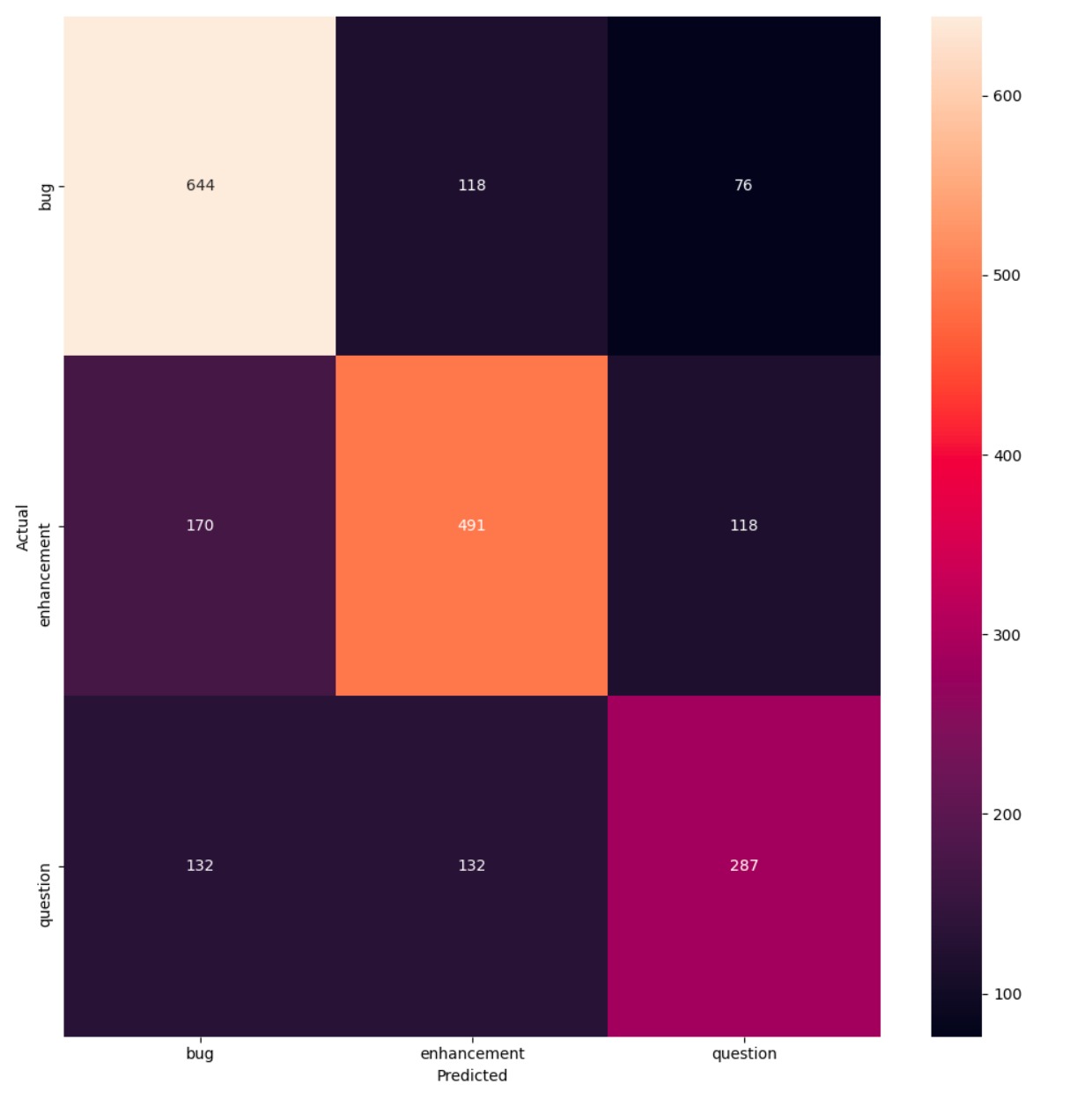

When an issue is opened, the bot predicts whether it should be labeled 🐞 bug, 💡 enhancement, or ❓ question, and applies the label automatically if appropriate.
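
The "if appropriate" step can be pictured as a confidence check on the model's output. The sketch below is illustrative only and is not the bot's actual code: the function name `choose_label`, the `threshold` parameter, and its default of 0.6 are assumptions made for this example. It takes one probability per label and returns a label only when the top prediction is confident enough.

```python
from typing import Dict, Optional

# The three labels the bot chooses between, as described above.
LABELS = ("bug", "enhancement", "question")


def choose_label(probabilities: Dict[str, float],
                 threshold: float = 0.6) -> Optional[str]:
    """Return the most probable label, or None if confidence is too low.

    `threshold` is a hypothetical cutoff chosen for illustration; the
    real bot's value and decision rule may differ.
    """
    # Pick the label with the highest predicted probability.
    best = max(LABELS, key=lambda name: probabilities.get(name, 0.0))
    # Only label the issue when the prediction clears the cutoff.
    if probabilities.get(best, 0.0) >= threshold:
        return best
    return None
```

With this rule, a prediction such as `{"bug": 0.8, "enhancement": 0.1, "question": 0.1}` yields `"bug"`, while a near-uniform prediction yields `None` and the issue is left unlabeled.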